Building a Robust Quality Framework for Programmatic Ad Operations

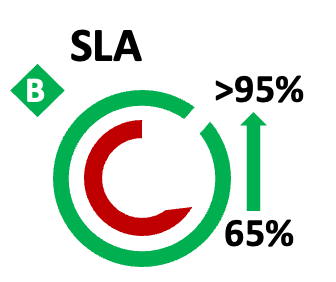

A case study of how a trafficking process with serious quality problems, including a 25% error rate and only 75% SLA adherence, was rebuilt into a scalable process backed by a strong quality framework.

BACKGROUND

The DSP trafficking team was responsible for setting up programmatic ad campaigns within stringent SLA timelines and accuracy standards. However, the team faced escalating quality challenges, reflected in:
🧪 Error Rate: 25%
🧪 SLA Adherence: 75%

These issues affected client satisfaction, campaign performance, and internal rework efforts.

CHALLENGES

💬 No centralized QA structure or error categorization system.
💬 Manual tracking of trafficking requests led to missed tasks and poor prioritization.
💬 Shift patterns were not aligned with volume inflow.
💬 Absence of root cause visibility meant recurring issues were never systematically addressed.

STRATEGIC INTERVENTIONS

1️⃣ Design and Implementation of QA Framework
✅ Established a structured QA process with pre-defined quality checklists aligned to campaign objectives and platform specifications.
✅ Implemented two-stage QA:
👉 QA validation by the QA team after setup.
👉 CM validation before campaign go-live.

2️⃣ Centralized Ticketing System
✅ Deployed a ticketing platform to log every trafficking request, capture timelines, assign ownership, and measure SLA compliance.
✅ Enabled prioritization, status visibility, and historical audit trails for every campaign task.
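As an illustration of how SLA compliance could be measured from logged tickets, here is a minimal sketch. It is not the team's actual tooling: the `Ticket` fields, the `met_sla` and `sla_adherence` helpers, and the sample data are all hypothetical, and it assumes each ticket records a creation time, a completion time, and an SLA window in hours.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Ticket:
    # Hypothetical ticket record; field names are assumptions for illustration.
    ticket_id: str
    created_at: datetime
    completed_at: Optional[datetime]  # None while the request is still open
    sla_hours: float


def met_sla(ticket: Ticket) -> bool:
    """A ticket meets its SLA if it was completed within its SLA window."""
    if ticket.completed_at is None:
        return False
    elapsed = ticket.completed_at - ticket.created_at
    return elapsed <= timedelta(hours=ticket.sla_hours)


def sla_adherence(tickets: List[Ticket]) -> float:
    """Percentage of tickets that met their SLA (0.0 for an empty list)."""
    if not tickets:
        return 0.0
    return 100.0 * sum(met_sla(t) for t in tickets) / len(tickets)


# Made-up sample: three requests finish inside a 4-hour SLA, one does not.
start = datetime(2024, 1, 8, 9, 0)
tickets = [
    Ticket("T-1", start, start + timedelta(hours=2), 4),
    Ticket("T-2", start, start + timedelta(hours=4), 4),
    Ticket("T-3", start, start + timedelta(hours=6), 4),
    Ticket("T-4", start, start + timedelta(hours=1), 4),
]
print(f"SLA adherence: {sla_adherence(tickets):.1f}%")
```

Counting open tickets as misses is a design choice made here for simplicity; a real report might exclude tickets whose SLA window has not yet elapsed.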

3️⃣ Dedicated QA Team Setup
✅ Formed a focused QA team trained in trafficking nuances and campaign standards.
✅ The QA team validated campaigns post-go-live and fed results back into a learning system.

4️⃣ Shift Realignment Based on Volume Trends
✅ Used volume inflow analytics to restructure shifts, ensuring team availability during peak hours.
✅ This resulted in better resource utilization and a reduced campaign backlog.
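The volume analysis behind a shift realignment can be as simple as counting request arrivals per hour and ranking the busiest hours. The sketch below shows one way to do that; the function names and sample timestamps are hypothetical, and the source does not describe the analytics that were actually used.

```python
from collections import Counter
from datetime import datetime
from typing import Iterable, List


def hourly_inflow(arrivals: Iterable[datetime]) -> Counter:
    """Count how many requests arrived in each hour of the day (0-23)."""
    return Counter(ts.hour for ts in arrivals)


def peak_hours(arrivals: Iterable[datetime], top_n: int = 3) -> List[int]:
    """Return the top_n busiest hours, busiest first; ties go to the earlier hour."""
    counts = hourly_inflow(arrivals)
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [hour for hour, _ in ranked[:top_n]]


# Made-up arrival timestamps for a single day.
sample_arrivals = [
    datetime(2024, 1, 8, 9, 5),
    datetime(2024, 1, 8, 9, 40),
    datetime(2024, 1, 8, 14, 10),
    datetime(2024, 1, 8, 14, 25),
    datetime(2024, 1, 8, 14, 55),
    datetime(2024, 1, 8, 20, 30),
]
print(peak_hours(sample_arrivals, top_n=2))
```

In practice the counts would be aggregated over several weeks and split by weekday before shifts are redrawn, since a single day is rarely representative.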

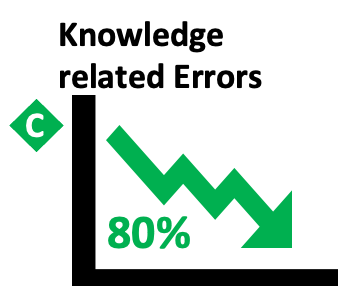

5️⃣ Root Cause Analysis (RCA) Process
To eliminate recurring errors and ensure continuous improvement, a monthly RCA cadence was introduced:

Error Categorization:
✅ Human Errors: Execution mistakes due to carelessness, oversight, or fatigue.
✅ Knowledge Errors: Lack of understanding of process, tools, or platform requirements.
✅ System Errors: Platform/tool failures or process ambiguity.
✅ Process Gaps: Unclear SOPs or missing checks in the workflow.

RCA Methodology:
✅ All errors logged via QA were tagged and analysed weekly.
✅ A standardized RCA template captured the "what, why, and how" of each error.
✅ Action items were defined for each RCA bucket: training, process revision, or tool enhancement.
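The tagging step lends itself to a small tally: once each logged error carries one of the four categories above, a breakdown by category shows which bucket needs attention first. The following is a minimal sketch under that assumption; the `ErrorCategory` enum, the `category_breakdown` helper, and the sample counts are hypothetical and do not come from the case study.

```python
from collections import Counter
from enum import Enum
from typing import Iterable, List, Tuple


class ErrorCategory(Enum):
    # The four buckets named in the error categorization above.
    HUMAN = "Human Error"
    KNOWLEDGE = "Knowledge Error"
    SYSTEM = "System Error"
    PROCESS_GAP = "Process Gap"


def category_breakdown(
    tagged_errors: Iterable[ErrorCategory],
) -> List[Tuple[str, int, float]]:
    """Return (category, count, share in %) rows, most frequent category first."""
    counts = Counter(tagged_errors)
    total = sum(counts.values())
    return [
        (category.value, n, round(100.0 * n / total, 1))
        for category, n in counts.most_common()
    ]


# Made-up week of tagged QA errors.
week_errors = (
    [ErrorCategory.HUMAN] * 5
    + [ErrorCategory.KNOWLEDGE] * 3
    + [ErrorCategory.PROCESS_GAP] * 2
)
for name, count, share in category_breakdown(week_errors):
    print(f"{name}: {count} ({share}%)")
```

Sorting by frequency keeps the largest bucket at the top of the review, which is where an action item is likely to pay off most.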

6️⃣ Knowledge Base Creation
Developed a dynamic Knowledge Repository:
✅ Contained step-by-step guides, FAQs, platform updates, and annotated screenshots.
✅ Maintained a Frequently Made Errors log with corrective actions and examples.
✅ New hires and existing team members used this as a first line of resolution, reducing repeat errors and dependency on SMEs.

The KB was updated monthly, driven by RCA outputs and QA feedback.

OUTCOMES

💡 Repeat errors were tracked and resolved through the knowledge base and retraining.
💡 A feedback loop, previously absent, was institutionalized through QA and RCA.

IMPACT

🎯 Achieved a marked improvement in quality within 2–3 months of framework implementation.
🎯 Improved client trust, reduced rework hours, and created a sustainable QA model.
🎯 The framework became a blueprint for other programmatic campaign operations within the organization.

SUCCESS FACTORS

💬 Cross-functional ownership between Ops, QA, Training, and Workforce Management.
💬 Strong RCA discipline and real-time corrective actions.
💬 High team engagement driven by visibility into impact and ongoing knowledge sharing.